Chapter 6 Introduction to regression modelling

- the step by step procedure to obtain an informative linear model, assess its validity and interpret it

- Interpret results of a simple linear model

- Discuss significance of a simple linear model

6.1 Simple linear regression

A regression attempt to explain the variations observed for a variable by other variables

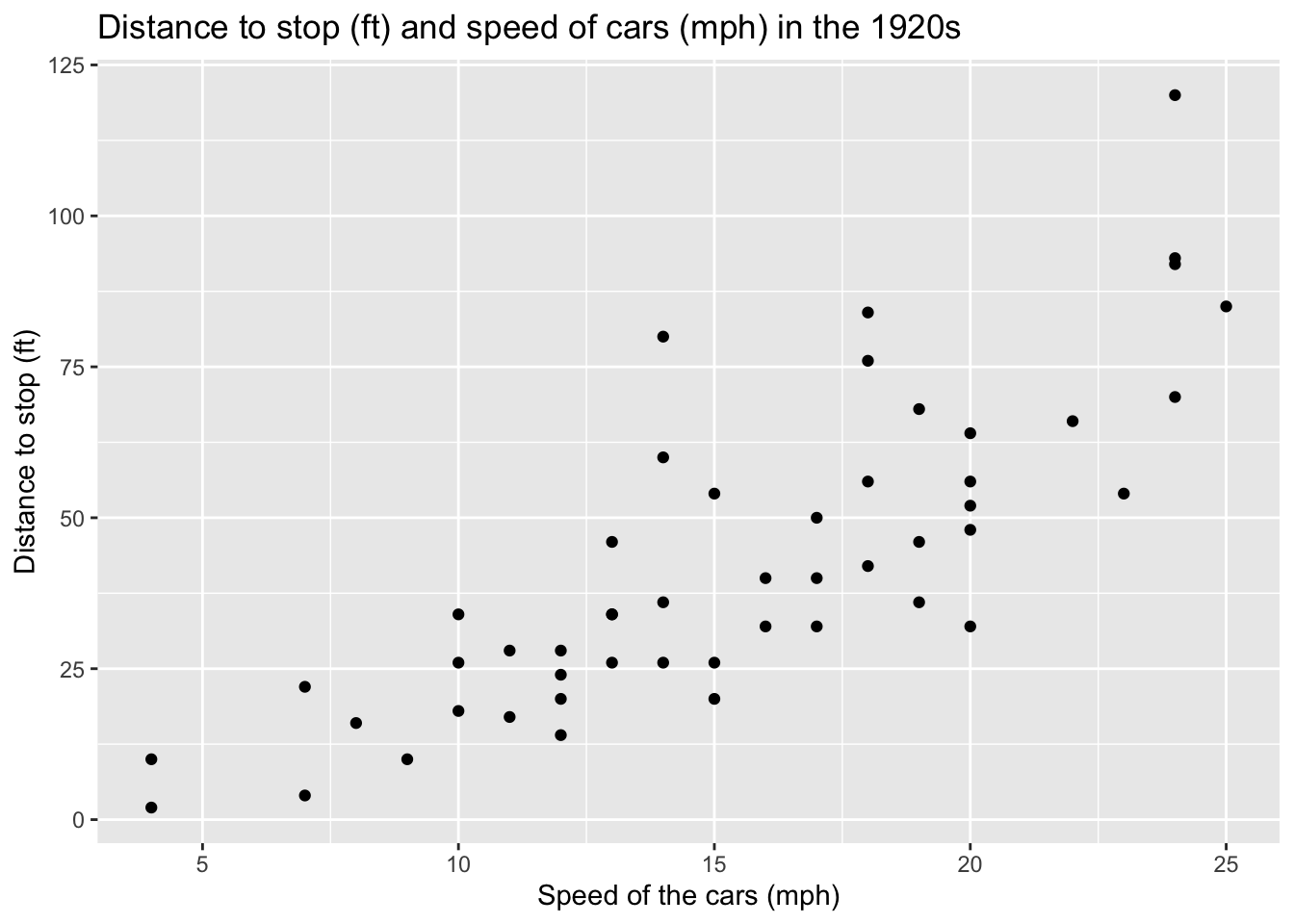

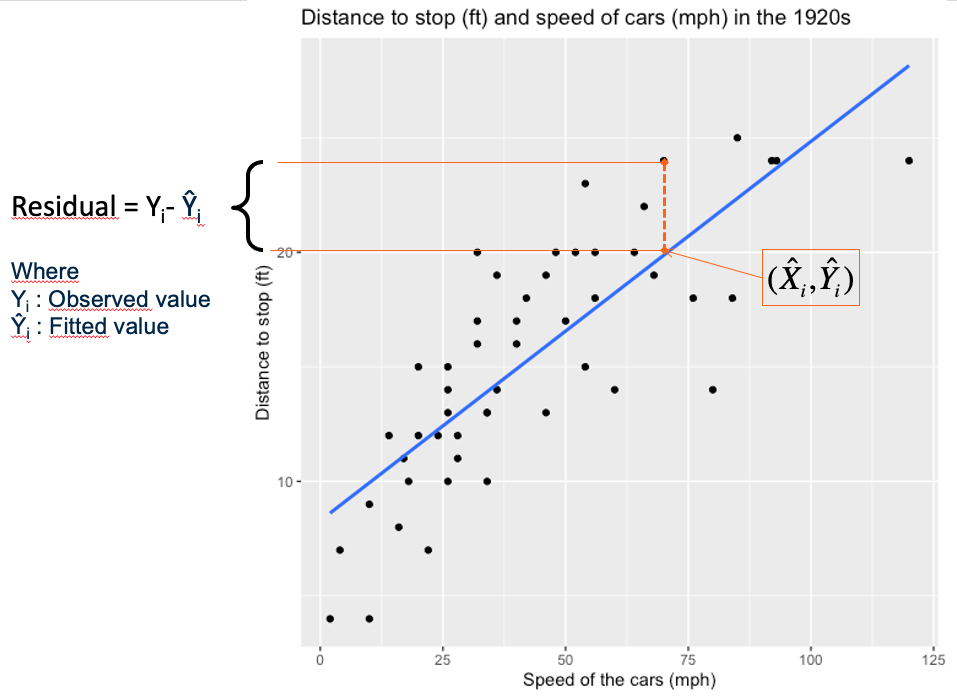

In the plot below, we try to explain the observed distances to stop for cars by the speed of the cars. Here between the two variables, we see a rather linear diagonal trend. We may be able to fit a simple linear regression model.

A simple linear regression model is a statistical model that attempt to fit a linear relationship between two variables: one to explain and one explanatory variable

By convention:

- Y axis presents the variable to explain also named the dependent variable, the outcome variable or the response variable

- X axis presents the explanatory variable also named the independent variable or the predictor variable.

A first measure of linear relationship, often wrongly used, is the coefficient of correlation.

6.1.1 Pearson’s coefficient of correlation

When we talk about correlation we often talk about the Pearson’s coefficient of correlation that quantify the linear association between two quantitative variables.

Mathematically, the coefficient of correlation is the standardized covariance (Equation (6.1)). While the covariance is the average the product of the deviations of observed values to their mean (Equation (6.2)).

\[\begin{equation} r = \frac{cov_x,_y}{s_xs_y} \tag{6.1} \end{equation}\]

\[\begin{equation} cov(x,y) = \frac{\sum{(x_i-\bar{x})(y_i-\bar{y})}}{N-1} \tag{6.2} \end{equation}\]

The coefficient of correlation r is :

- Dimensionless

- Range between -1 and 1

- its absolute value presents the force of the relationship

- its sign presents the direction of the relationship

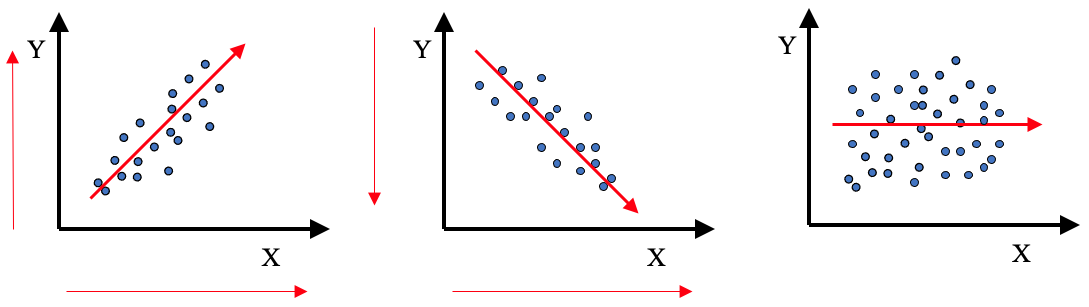

In the graphics below, what would be the estimated value of the Pearson’s coefficient of correlation?

Absence of linear association (r=0) does not mean absence of relation.

Correlation does not mean causation.

For details and other examples on too fast conclusions or spurious correlations see PennState Eberly College of Science (Statistics PennState - Eberly College of Science 2021) and Tyler Vigen’s Website (Vigen 2021).

6.1.2 Simple linear regression model

A simple linear model fit a line between the points of the two variables. The final equation is of the form:

\[\begin{equation} Y = \beta_1X + \beta_0 + \epsilon \tag{6.3} \end{equation}\]

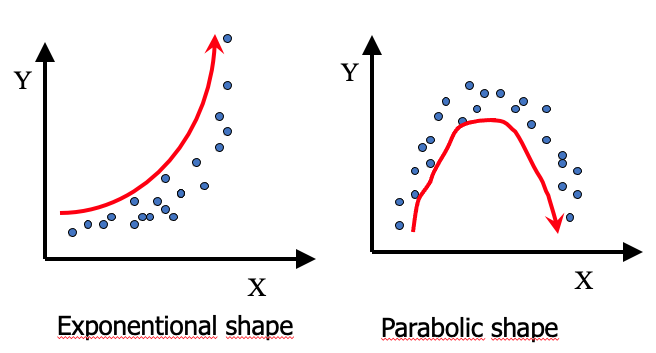

Which line fits best ?

Of all possible lines, the regression line is the one that is the closest on average to all the points.

The fitting of the regression line is based the least squares criteria. The aim is to minimize the sum of square distance between observed values and fitted values on the line (Equation (6.4)). The sum of square distance also named sum of square Error (SSE) or sum of square residuals (SSRes). When SSE is minimum it implies that the variance of the residuals that remain to be explained are fit to the minimum.

\[\begin{equation} SSE=\sum_{i=1}^{n}{(y_i-\hat{y_i})^2} \tag{6.4} \end{equation}\]

To fit a simple linear regression with R follow the example below:

##

## Call:

## lm(formula = dist ~ speed, data = cars)

##

## Residuals:

## Min 1Q Median 3Q Max

## -29.069 -9.525 -2.272 9.215 43.201

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -17.5791 6.7584 -2.601 0.0123 *

## speed 3.9324 0.4155 9.464 1.49e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 15.38 on 48 degrees of freedom

## Multiple R-squared: 0.6511, Adjusted R-squared: 0.6438

## F-statistic: 89.57 on 1 and 48 DF, p-value: 1.49e-12The output tells us that:

- Y(distance to stop) = 3.93*X(speed) -17.57(intercept) + \(\epsilon\)

- If a car increases its speed by 1 mph, its distance to stop increases by 3.93 ft.

- This estimated coefficient is significantly different from 0 (\(Pr(>|t|) = p-value = 1.49e^{-12}\))

- The model explain 64% of the observed variance in distances to stop for cars (Adjusted R-squared or coefficient of determination \(R^2\)).

The residuals are leftover of the outcome variance after fitting a model. They are use to:

- Verify if linear regression assumptions are met {6.1.3}

- Show how poorly a model represents data

- Reveal unexplained patterns in the data by the fitted model

- Could help improving the model in an exploratory way.

6.1.3 Post-hoc assumptions verification

How to verify if a simple linear regression model was appropriate?

The method is descriptive and based on the distribution of the residuals that allows assessing:

- Linear and additive of predictive relationships

- Homoscedasticity (constant variance) of residuals (errors)

- Normality of the residuals distribution

- Independence (lack of correlation) residuals (in particular, no correlation between consecutive errors in the case of time series data)

Using R:

FALSE null device

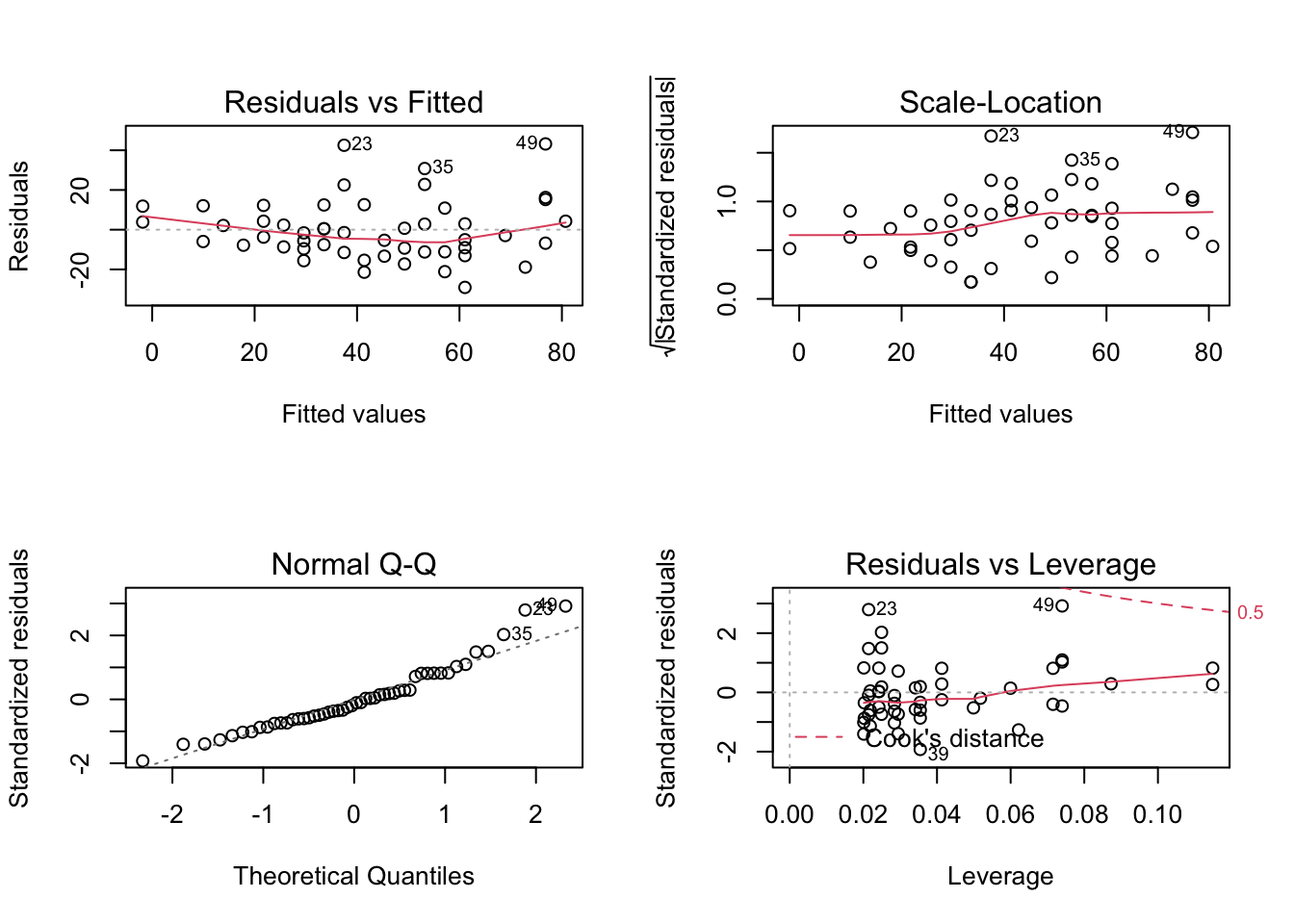

FALSE 1From top to bottom and left to right the plots assess :

- Linearity and additivity of predictive relationships. A good fitting should show a red horizontal line, the variance of the residuals is randomly spread above and below the horizontal line.

- Homoscedasticity (i.e. constant variance) of residuals (errors). A good fitting should show a red horizontal line, the variance of the residuals is constant and do not depend on the fitted values.

- Normality of the residuals distribution. A good fitting should show a alignment of the dots on the diagonal of the Q-Q plot.

- Influential observations with Cook’s distance: if dots appear outside the Cook’s distance limits (red dashes) they are influential observations or even outliers (extreme). Their value need to be verified as they might negatively influence the fitting.

What do you think of the model above?

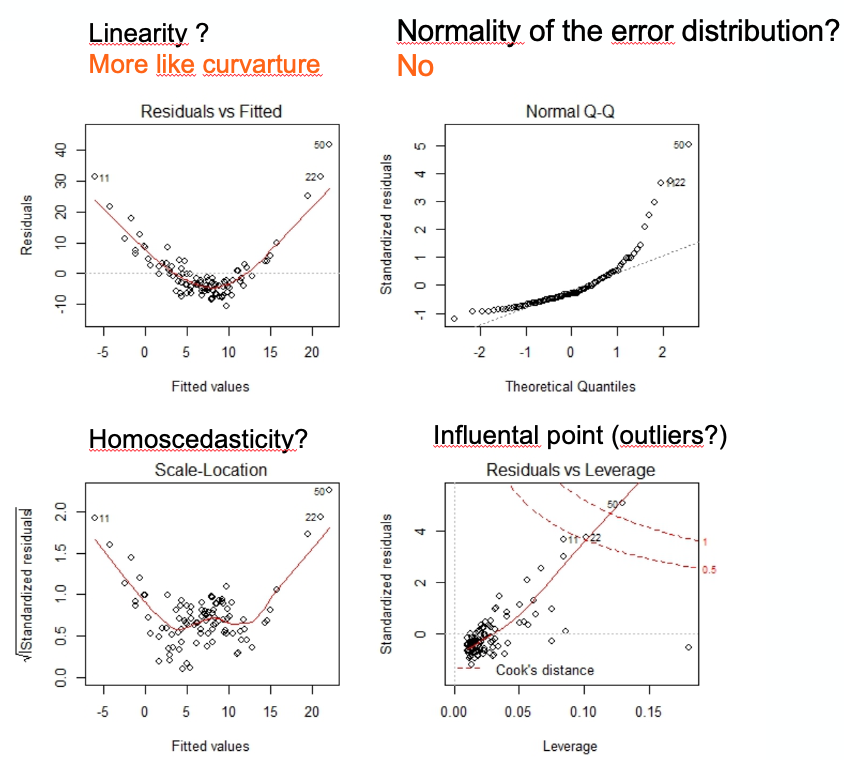

Below is an example of post-hoc assumptions verification of a linear model that shows problems. Here the model need to be refined or done differently.

6.2 Multiple linear regression model

A multiple (or multivariate) linear regression model is a statistical model that attempt to fit a linear relationship between one outcome variable to explain and several explanatory variables (determinants).

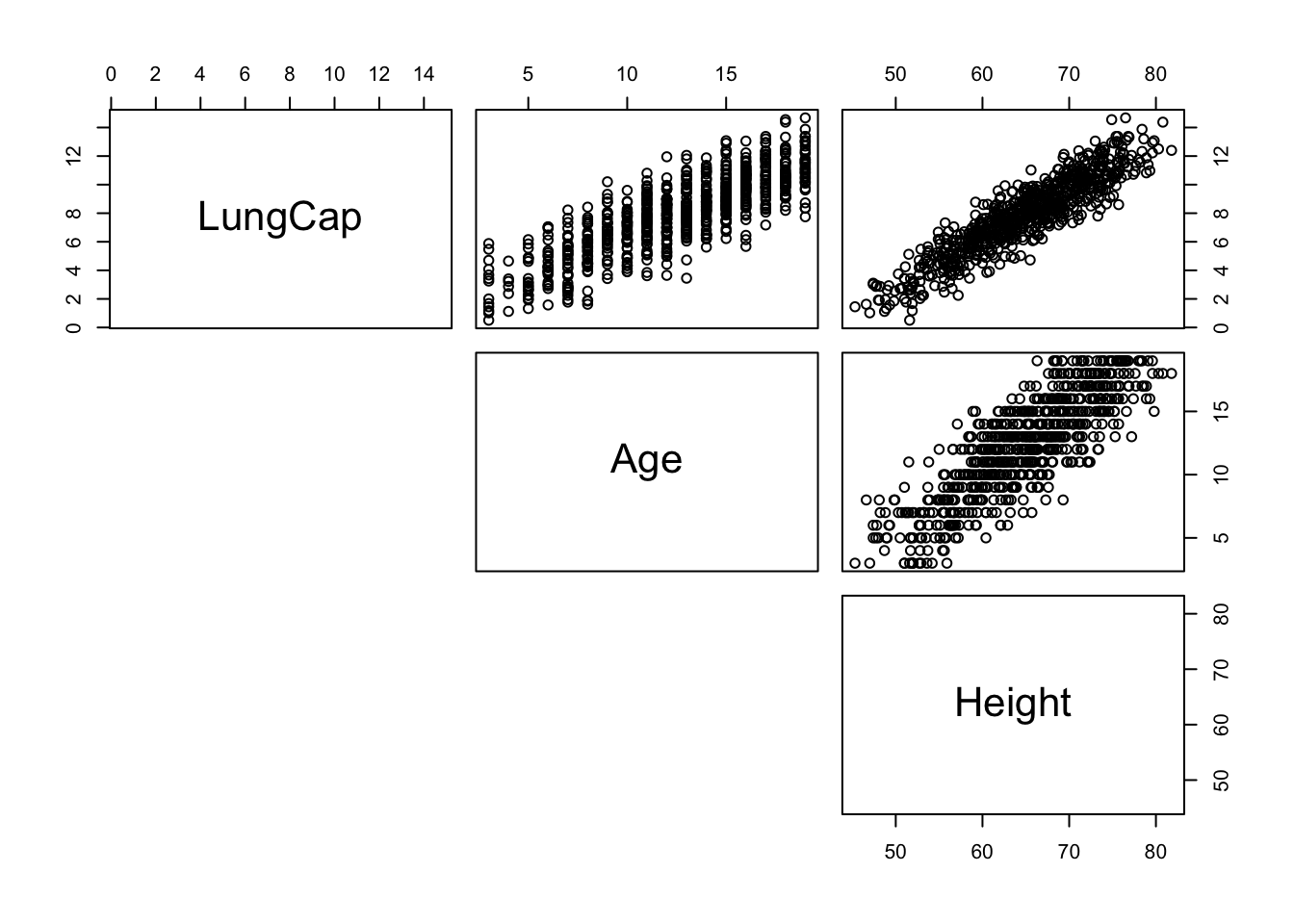

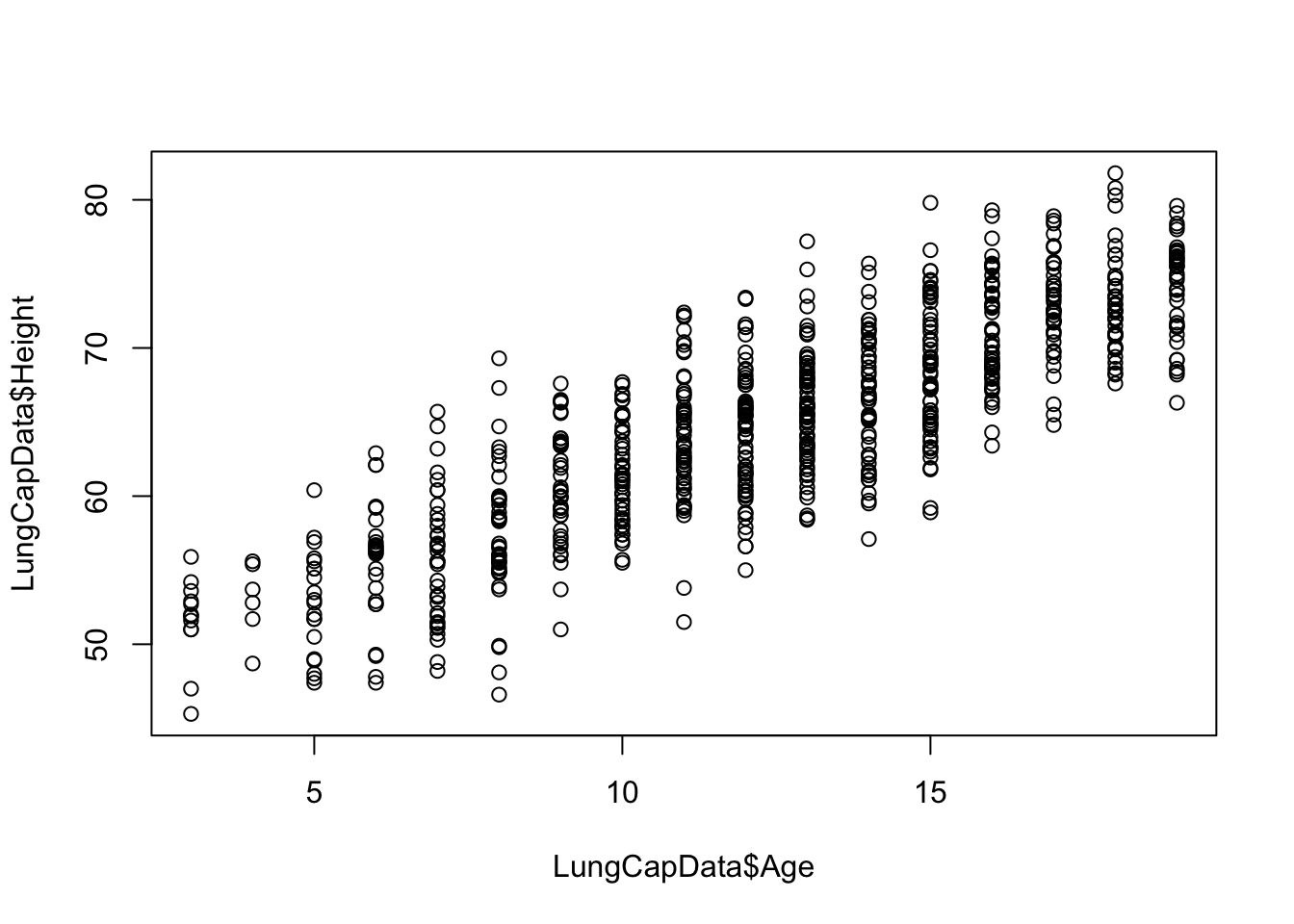

For instance, do your age and your height predict your lung capacity?

The table below presents the lung capacity dataset shared by Mike Marin and Ladan Hamadani and available at http://www.statslectures.com/ (Marin and Hamadani 2021). The first column is the lung capacity outcome variable and the other columns are some possible determinants.| LungCap | Age | Height | Smoke | Gender | Caesarean |

|---|---|---|---|---|---|

| 6.475 | 6 | 62.1 | no | male | no |

| 10.125 | 18 | 74.7 | yes | female | no |

| 9.550 | 16 | 69.7 | no | female | yes |

| 11.125 | 14 | 71.0 | no | male | no |

| 4.800 | 5 | 56.9 | no | male | no |

| 6.225 | 11 | 58.7 | no | female | no |

First, we have a look at the two first covariates along with the outcome variable using a pair plot to visually assess any linear relationship.

We could also look at each covariate against the outcome variable using a simple linear regression. However we will miss some information. Maybe it is better to be tall than small to have a large lung capacity whatever your age. To test this hypothesis we should apply a multivariate regression on the ouctome variable.

Mathematically, a multivariate regression is a generalization of a simple linear regression.

From one predictor …

\(y_i=\beta_0 + \beta_1x_{i1} + \epsilon_i\)

… to two or more predictors

\(y_i=\beta_0 + \beta_1x_{i1} + \beta_2x_{i2} + ...+ \beta_kx_{ik} + \epsilon_i\)

and the independent error terms \(\epsilon_i\) follow a normal distribution with mean 0 and equal variance \(\sigma^2\).

The R function calls for a multiple regression is similar to a simple linear regression. First you build the model adding up the covariate on the right hand side of the equation. Next you display the summary and interpret the coefficients.

##

## Call:

## lm(formula = LungCap ~ Age + Height, data = LungCapData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.4080 -0.7097 -0.0078 0.7167 3.1679

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -11.747065 0.476899 -24.632 < 2e-16 ***

## Age 0.126368 0.017851 7.079 3.45e-12 ***

## Height 0.278432 0.009926 28.051 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.056 on 722 degrees of freedom

## Multiple R-squared: 0.843, Adjusted R-squared: 0.8425

## F-statistic: 1938 on 2 and 722 DF, p-value: < 2.2e-16- P-values for the t-tests appearing in the table of estimates suggest that the slope parameters for Age and Height are significantly different from 0 (\(p-value < 0.05\)).

- Residual standard error of 1.056 is rather small

- F-statistic (\(1938\)) is highly significant (\(p-value: < 2.2e-16\)) implying that the model containing Age and Height is more useful in predicting lung capacity than not taking into account those 2 predictors. (Note that this does not tell us that the model with the 2 predictors is the best model!)

- Multiple R squared: R-squared measures the amount of variation in the response variable that can be explained by the predictor variable. The multiple R-squared is for models with multiple predictor variables. When adding up predictors, the multiple Rsquared increases, as a predictor always explain some portion of the variance.

- Adjusted Rsquared: is the R-squared corrected for multiple predictors’ side-effect. It adds penalties for the number of predictors in the model. It shows a balance between the most parsimonious model, and the best fitting model.

\(adj R^2 = 1 - \frac{n-1}{n-(k+1)}.(1- R^2)\)

where n: size of the sample and k: number of independent variables

Generally, if you have a large difference between your multiple and your adjusted R-squared that indicates you may have overfitted your model.

In model3, when age increases by one year, the lung capacity increases by 0.12, all other things (height) being equal for the persons in the population. When height increases by one unit, the lung capacity increases by 0.27, all other things (height) being equal for the persons in the population.

Once a model is built, we can use it for prediction.

You can predict at the population level with the estimation of the confidence interval for the mean response

## fit lwr upr

## 1 11.53421 10.99061 12.07781We can be \(95\%\) confident that the average lung capacity score for all persons with age = 30 and height = 70 is between 10.99 and 12.07.

You can predict at the individual level using the prediction interval for a new response

## fit lwr upr

## 1 11.53421 9.390385 13.67804We can be \(95\%\) confident that the lung capacity score of an individual with age = 30 and height = 70 will be between 9.39 and 13.67

6.3 Logistic regression model

Logistic regression is a process of modeling the probability of a categorical outcome given an input variable.

The most common logistic regression is the binary logistic regression that models a binary outcome (ex: Dead/Alive, yes/no). Multinomial (or polychotomous) logistic regression can model scenarios where there are more than two possible modalities/outcomes (ex: low, medium, high) for the categorical variable.

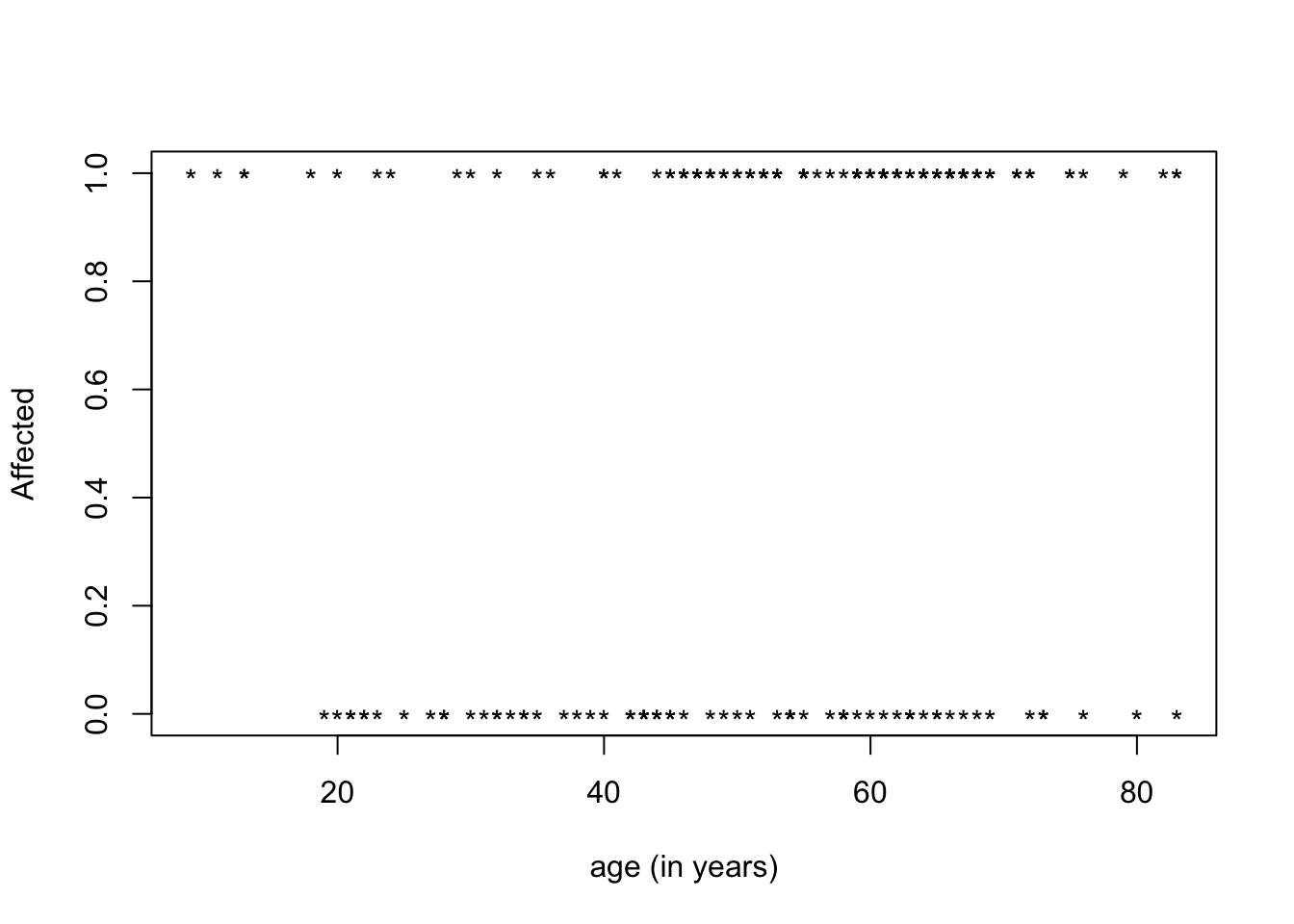

As an example, let’s investigate the risk factor of chronic kidney disease (CKD).The response variable is either 1 (Affected) or 0 (Not affected) - a dichotomous response. By definition, that’s a categorical response, so we can’t use linear regression methods to predict it.

When coding the outcome variable 0 and 1 we can still represent what is happening in association with a covariate such as age but we cannot draw an simple line.

We can see that the proportions (or probabilities) of having the disease must lie between 0 and 1. It corresponds to the prevalence (%) of CKD according to age.

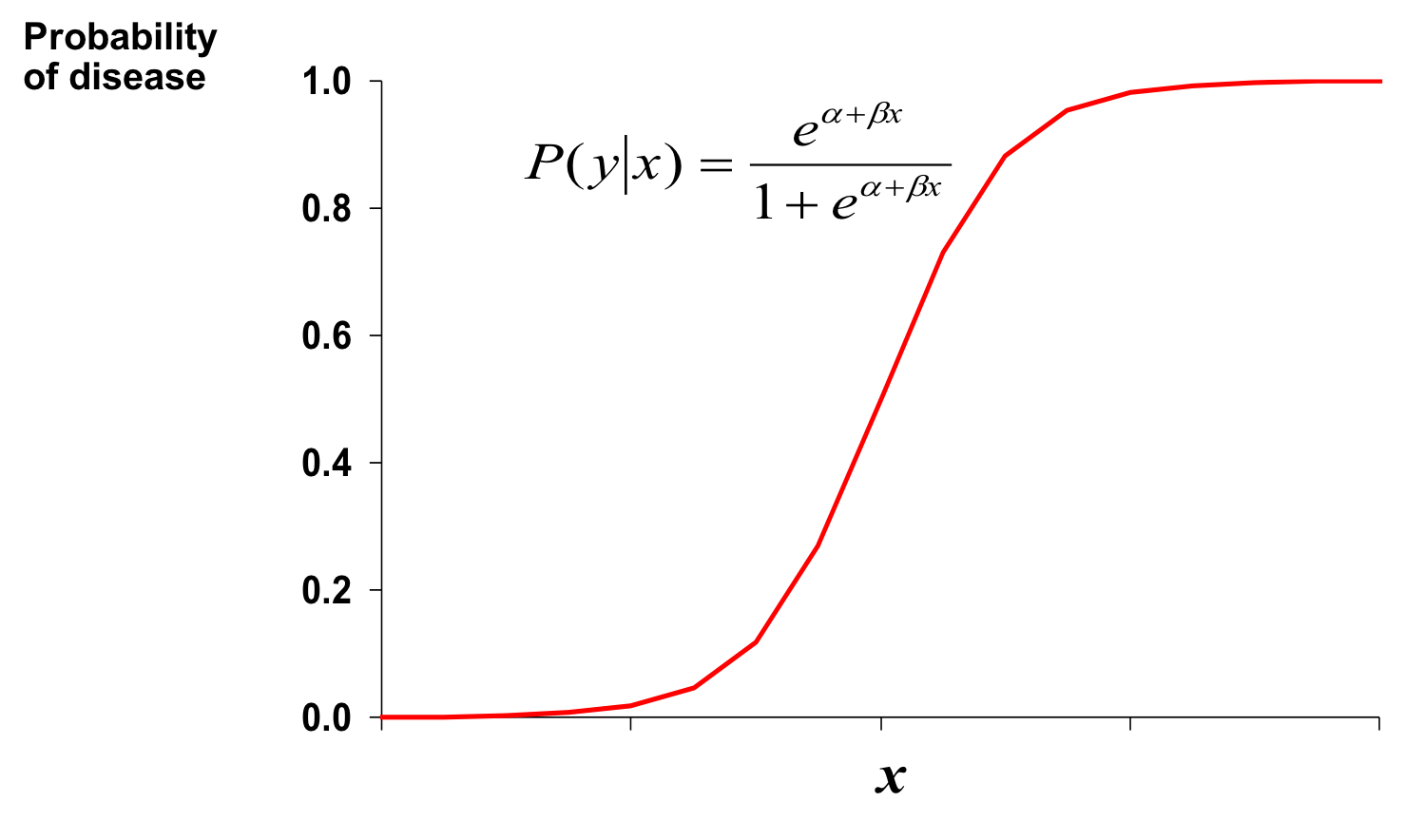

This relationship can be model using the Logistic function.

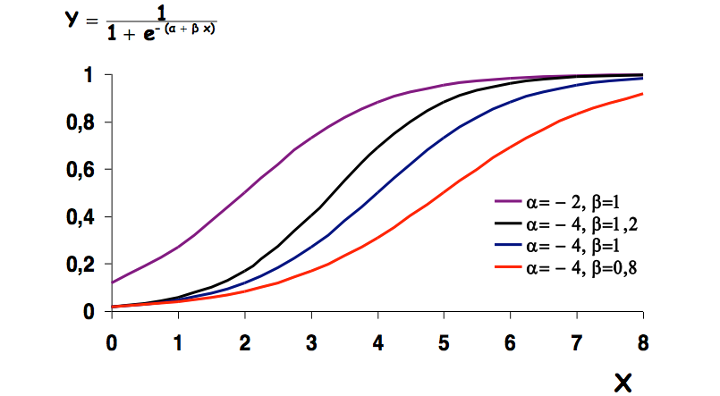

- \(Y = \frac{1}{1 + e^{-(\beta_0 + \beta_1X)}}\)

- \(\beta_0\) and \(\beta_1\) give the shape of the curve

We note the the \(\beta_0 + \beta_1X\) part looks really similar a linear model. In fact, to estimate those coefficients the mathematical trick is to transform the above equation in order to get to a linear form.

By transformation:

- First we compute the odds of the disease

\(Y/(1-Y) = \frac{1}{e^{-(\beta_0 + \beta_1X + \beta_2x_2 + ... +\beta_ix_i)}}\)

- Second we compute the Logit

\(ln(Y/1-Y)=\beta_0 + \beta_1x_1 + \beta_2x_2 + ...\beta_ix_i\)

\(\beta_0\) = log odds of disease in unexposed

\(\beta_i\) = log odds ratio associated with being exposed to i (age for instance)

The Logit function is defined as the natural log of the odds. A probability of 0.5 corresponds to a logit of 0, probabilities smaller than 0.5 correspond to negative logit values, and probabilities greater than 0.5 correspond to positive logit values.

After fit the model and estimating the \(\beta_0\) and \(\beta_1\) coefficients, we can retrieve the Odds Ratio (OR) which is the Probability of having the outcome / Probability of not having the outcome when exposed compare to the Probability of having the outcome / Probability of not having the outcome when unexposed :

OR= \(e^\beta_i\) = odds ratio associated with being exposed to i

# Can age contribute to the onset of the disease?

model_ckd <- glm(affected ~ age2, family=binomial("logit"), data=ckd)

summary(model_ckd) ##

## Call:

## glm(formula = affected ~ age2, family = binomial("logit"), data = ckd)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.8876 -1.2231 0.7832 0.9150 1.5734

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -1.198334 0.528806 -2.266 0.023444 *

## age2 0.033681 0.009818 3.431 0.000602 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 259.57 on 197 degrees of freedom

## Residual deviance: 247.03 on 196 degrees of freedom

## AIC: 251.03

##

## Number of Fisher Scoring iterations: 4The coefficient for age is positively link to the disease. When you increase in age you increase your risk of having disease. To compute the OR, we can use the \(exp(0.039)\) but for a nicest output with confidence interval we can use the epiDisplay library and its logistic.display() function.

# Loading the epiDisplay library in the R environment

library(epiDisplay)

logistic.display(model_ckd) ##

## Logistic regression predicting affected

##

## OR(95%CI) P(Wald's test) P(LR-test)

## age2 (cont. var.) 1.03 (1.01,1.05) < 0.001 < 0.001

##

## Log-likelihood = -123.5132

## No. of observations = 198

## AIC value = 251.0263The odd (risk) of having the disease is 1.04 times higher when people get older by 1 year (95%CI[1.02,1.06]). The P(Wald’s test) tells us that the estimated coefficient is different from 0 (or the OR is different from 1). The P(LR-test) log-likelihood ratio test tells us that the model is better than the NULL model (without any covariate).

We can add other covariates and then use a stepwise approach to get the most parsimonious model.

model_ckd2 <- glm(affected ~ age2 + bp.limit, family=binomial("logit"), data=ckd)

summary(model_ckd2)##

## Call:

## glm(formula = affected ~ age2 + bp.limit, family = binomial("logit"),

## data = ckd)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.9805 -1.1433 0.5753 0.9494 1.8686

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -1.87178 0.59109 -3.167 0.00154 **

## age2 0.03529 0.01034 3.414 0.00064 ***

## bp.limit 0.85816 0.22052 3.891 9.96e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 259.57 on 197 degrees of freedom

## Residual deviance: 229.70 on 195 degrees of freedom

## AIC: 235.7

##

## Number of Fisher Scoring iterations: 4model_ckd2<- step(model_ckd2)## Start: AIC=235.7

## affected ~ age2 + bp.limit

##

## Df Deviance AIC

## <none> 229.71 235.71

## - age2 1 242.42 246.42

## - bp.limit 1 247.03 251.03# The best model is with the smallest AIC

# It is the model without removing any variable (<none>)

logistic.display(model_ckd) ##

## Logistic regression predicting affected

##

## OR(95%CI) P(Wald's test) P(LR-test)

## age2 (cont. var.) 1.03 (1.01,1.05) < 0.001 < 0.001

##

## Log-likelihood = -123.5132

## No. of observations = 198

## AIC value = 251.02636.4 Collinearity

Collinearity is the correlation between 2 independent variables (predictors).

Multi-collinearity is cross-correlation between multiple independent variables (predictors).

If the independent variables are perfectly correlated, you will face these problems:

- Inflated coefficients

- Values and signs of the coefficients are incoherent with common knowledge

- Underestimated Student T test (non-significant p-values)

- Unsteady results, adding or deleting observation strongly modify the values and signs of the coefficients

6.5 Detecting (multi-)collinearity

6.5.1 Coefficient of correlation and visual assessment

Is there a problem in our example? Is Age too highly correlated with height?

A test over the coefficient of correlation can be performed where:

- H0: the coefficient of correlation r = 0

- H1: the coefficient of correlation r != 0

with \(\alpha < 0.05\)

##

## Pearson's product-moment correlation

##

## data: LungCapData$Age and LungCapData$Height

## t = 40.923, df = 723, p-value < 2.2e-16

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.8123578 0.8564338

## sample estimates:

## cor

## 0.8357368Note that even a small correlation between 2 variables and thus the test over the coefficient of correlation statistically significant.

6.5.2 Variance Inflation Factor

Therefore other metrics can be computed. The most commun one is the Variance Inflation Factor or VIF and its inverse the Tolerance indicator or TOL

Variance Inflation Factor (VIF): how much of the inflation of the standard error could be caused by collinearity

Tolerance indicator (TOL) = \(\frac{1}{VIF}\)

If all of the variables are orthogonal to each other (uncorrelated with each other) both indicators (TOL and VIF) are 1.

If a variable is very closely correlated to another variable, the TOL goes to 0, and the VIF gets very large. An independent variable with a VIF > 10 (empirical threshold) should be look at and some remedial measures might have to be taken.

Using the R mctest library and the imcdiag() function on your model you will get the VIF and TOL metrics and others. See help for interpretation of the others measures.

##

## Call:

## imcdiag(mod = model3)

##

##

## All Individual Multicollinearity Diagnostics Result

##

## VIF TOL Wi Fi Leamer CVIF Klein IND1 IND2

## Age 3.3163 0.3015 1674.66 Inf 0.5491 -1.0332 0 4e-04 1

## Height 3.3163 0.3015 1674.66 Inf 0.5491 -1.0332 0 4e-04 1

##

## 1 --> COLLINEARITY is detected by the test

## 0 --> COLLINEARITY is not detected by the test

##

## * all coefficients have significant t-ratios

##

## R-square of y on all x: 0.843

##

## * use method argument to check which regressors may be the reason of collinearity

## ===================================6.5.3 Remedial measures

The remedial measures to manage collinearity could be :

- Manual exclusion: VIF values above 10 and as well as the concerned variables logically seem to be redundant. You remove one of them.

- Aggregation: The two redundant variables could be summarized into an third variable using statistical methods such as Principal Component Analysis (PCA). That third variable will then be used in the model in place of the first two.

- Automatic variable selection: you let the modeling algorithm able the issue using stepwise regression which might remove variables that generate unstable modeling results

6.6 Explanatory variable selection

When defining a model you would like to either obtain the best explanatory model (goodness of fit) or the best predictive model (parsimonious). To this aim you need to select the most relevant variables. If you add all the variables you have in hand it might be one too many. The number of degrees of freedom will decrease and as the consequence the residual variance could increase.

How to select the contributing variables?

- Some variables are correlated with redundancy of information: the variables with the effect the easiest to explain/express should to be kept.

- Univariate tests (e.g., outcome vs exposition) are highly recommended prior building the model. Results with a p-value > 0.25 or 0.20 (empirical cut-off) is less likely to contribute to explain the outcome in a larger model. You might not want to include it in the full model. However, note that even so a variable shows a p-value > 0.25 in an univariate test you might still want to force it in the model because of inconsistency with your prior knowledge (literature, own studies).

- Automatic and iterative procedures for explanatory variable selection based on goodness of fit criterion (e.g., on Akaike’s information criterion - AIC)

6.6.1 Iterative procedures for explanatory variable selection

These approaches introduced or suppressed variables based on results of nested models tests using goodness of fit criterion. The 3 variants are:

- Backward procedure

- First, all of the predictors under consideration are in the model

- One at a time is deleted the less significant (the “worst”)

- All the remaining variables make a significant contribution

- Forward procedure

- Begins with none of the potential explanatory variables

- Adds one variable at a time

- Stops when no remaining variable makes a significant contribution

- Stepwise procedure

- Modification of the forward procedure

- Drops variables if they lose their significance as other are added

The goodness of fit criteria is often the Akaike’s information criterion or AIC. The smaller the AIC, the better the fit.

The R command for the stepwise approach is:

## Start: AIC=34.43

## LungCap ~ Age + Height + Smoke + Gender + Caesarean

##

## Df Sum of Sq RSS AIC

## <none> 747.78 34.43

## - Caesarean 1 5.80 753.57 38.03

## - Smoke 1 24.35 772.13 55.66

## - Gender 1 24.55 772.33 55.85

## - Age 1 82.65 830.43 108.44

## - Height 1 716.54 1464.32 519.66Here at each iteration one variable is removed and compare to the full model. For example, the line -Caesarean indicates that the variable Caesarean is removed. The AIC of 38.03 is compared to the AIC 34.43 of the full model (\(<none>\) removed). The full model is more informative than the model without the variable Caesarean. The interpretation is the same for the rest of the variables.

##

## Call:

## lm(formula = LungCap ~ Age + Height + Smoke + Gender + Caesarean,

## data = LungCapData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.3388 -0.7200 0.0444 0.7093 3.0172

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -11.32249 0.47097 -24.041 < 2e-16 ***

## Age 0.16053 0.01801 8.915 < 2e-16 ***

## Height 0.26411 0.01006 26.248 < 2e-16 ***

## Smokeyes -0.60956 0.12598 -4.839 1.60e-06 ***

## Gendermale 0.38701 0.07966 4.858 1.45e-06 ***

## Caesareanyes -0.21422 0.09074 -2.361 0.0185 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.02 on 719 degrees of freedom

## Multiple R-squared: 0.8542, Adjusted R-squared: 0.8532

## F-statistic: 842.8 on 5 and 719 DF, p-value: < 2.2e-166.6.2 Goodness of fit analysis

You can perform a goodness of fit analysis step by step using the anova() function call between 2 nested models [NOTE: do not mix-up anova() with aov() for the comparison of more than two means].

##

## Call:

## lm(formula = LungCap ~ Height + Smoke + Gender + Caesarean, data = LungCapData)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.3631 -0.7360 0.0290 0.7529 3.0710

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -14.162235 0.365323 -38.766 < 2e-16 ***

## Height 0.339699 0.005706 59.536 < 2e-16 ***

## Smokeyes -0.497653 0.132004 -3.770 0.000177 ***

## Gendermale 0.197766 0.080852 2.446 0.014683 *

## Caesareanyes -0.206444 0.095549 -2.161 0.031055 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.074 on 720 degrees of freedom

## Multiple R-squared: 0.8381, Adjusted R-squared: 0.8372

## F-statistic: 932.1 on 4 and 720 DF, p-value: < 2.2e-16All the variables in the model4var are significant. The anova() function compare the full model to the model4var.

## Analysis of Variance Table

##

## Model 1: LungCap ~ Age + Height + Smoke + Gender + Caesarean

## Model 2: LungCap ~ Height + Smoke + Gender + Caesarean

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 719 747.78

## 2 720 830.43 -1 -82.653 79.472 < 2.2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The 2 models differ (p-value < 0.05). The AIC index highlight the model with the best fit (smallest AIC); here it is the full model.

## df AIC

## modelFull 7 2093.888

## model4var 6 2167.896